Hi! I’m a third-year undergraduate researcher and engineer at UC Berkeley studying Electrical Engineering and Computer Science (EECS).

I’m broadly interested in Reinforcement Learning and Robot Learning. Currently, I work on Policy Extraction and offline-to-online RL at BAIR, where I’m fortunate to be advised by Professor Sergey Levine. I’m also grateful to work on Test-Time methods for RL as part of the inaugural OpenAI launchpad Fellows program. Previously, I worked on RL post-training for VLAs with the GEAR team at NVIDIA, LLM Infra with the ChipNemo team at NVIDIA, and Energy Validation Infra for Robotaxi Charging at Tesla. Outside research, I build machine learning applications with my friends at Cal Launchpad.

If you’d like to chat, please reach out at [andypeng at berkeley dot edu].

Publications

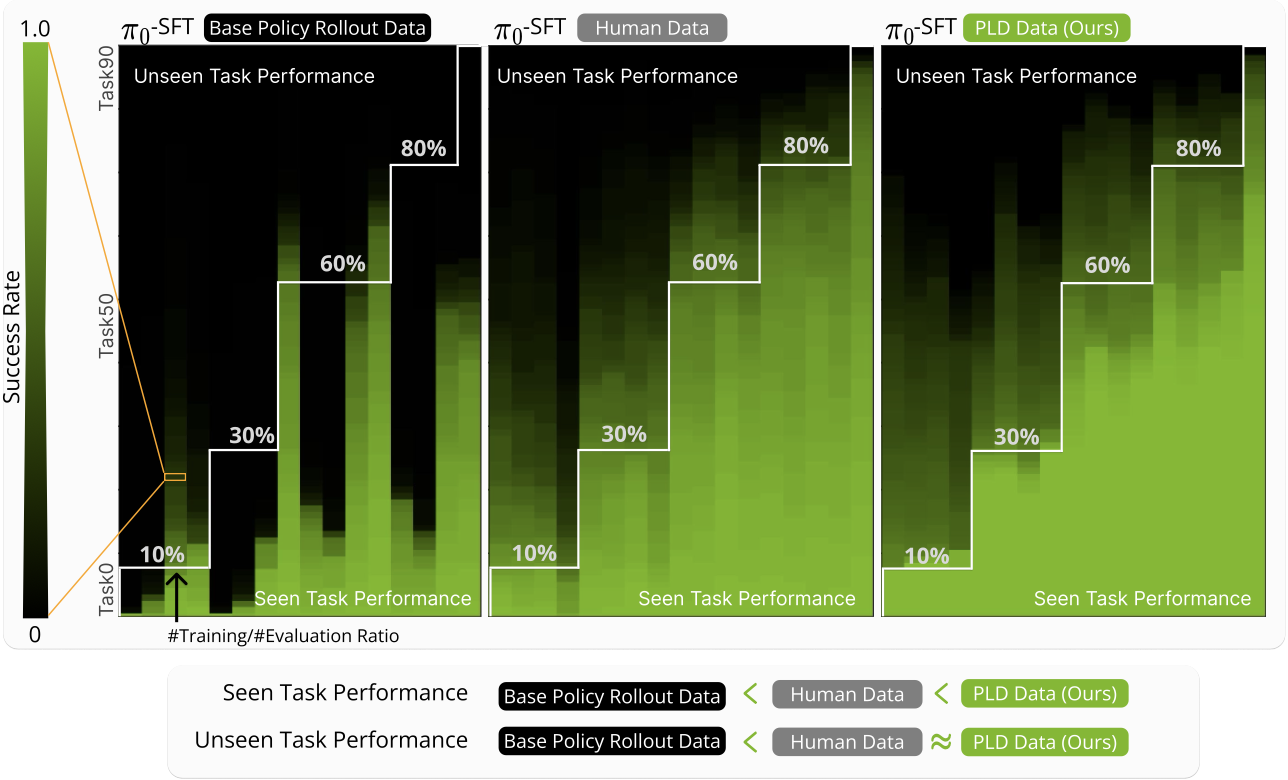

Self-improving Vision-Language-Action models with data generation via Residual RL
Wenli Xiao*, Haotian Lin*, Andy Peng, Haoru Xue, Tairan He, Yuqi Xie, Fengyuan Hu, Jimmy Wu, Zhengyi Luo, Linxi "Jim" Fan, Guanya Shi, Yuke Zhu
International Conference on Learning Representations (ICLR), 2026
[paper] [website]

Efficient Online Reinforcement Learning Fine-Tuning Need Not Retain Offline Data
Zhiyuan Zhou*, Andy Peng*, Qiyang Li, Sergey Levine, Aviral Kumar
International Conference on Learning Representations (ICLR), 2025
[paper] [website] [code]